Google recently announced MusicLM, an AI model that generates music from text. Generative AI started with creating art and relevant images. Now Google is applying the same idea to music, a task that normally takes a trained expert. Google has published a webpage with sample outputs, and we expect a public rollout soon. When you describe the situation and what you want, MusicLM can create a piece of music for you.

MusicLM AI

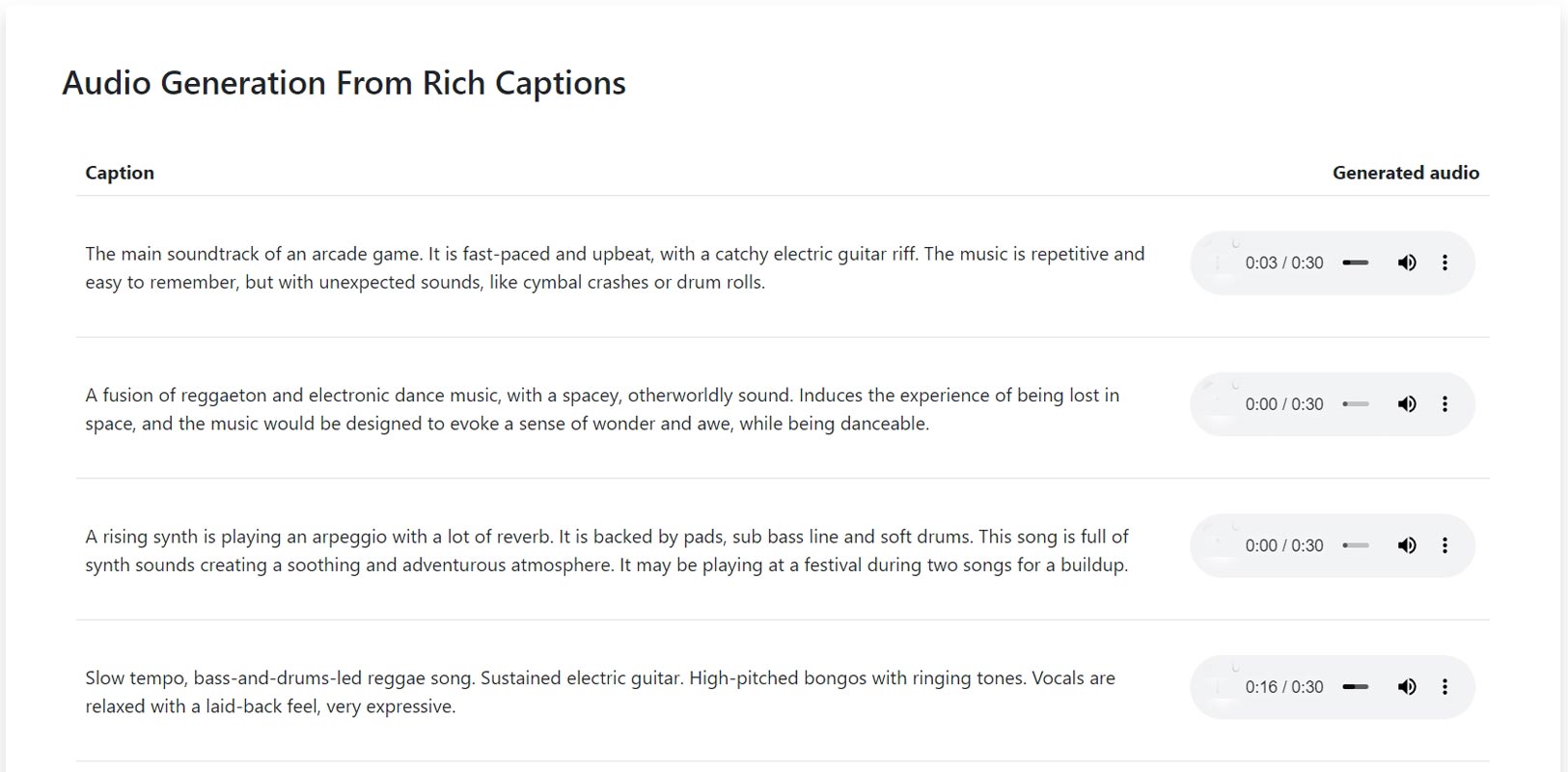

Google researchers have developed an AI model, MusicLM, that can generate musical pieces several minutes long from text prompts, similar to how DALL-E generates images from written prompts. It can also take a melody whistled or hummed by a person and render it with other instruments. The public can’t interact with MusicLM yet, but Google has made a variety of samples generated by the model available for listening.

MusicLM casts conditional music generation as a hierarchical sequence-to-sequence modeling task. It generates music at 24 kHz that remains consistent over several minutes, and according to the researchers it outperforms previous systems in both audio quality and adherence to the text description. Because it can be conditioned on both text and a melody, MusicLM can also transform whistled and hummed melodies into the style of music a text description calls for. To support further research, Google has publicly released MusicCaps, a dataset of 5.5k music-text pairs with detailed text descriptions provided by human experts.

In short, MusicLM treats conditional music generation as a hierarchical sequence-to-sequence modeling problem and produces 24 kHz music that stays consistent over several minutes. The researchers’ experiments show that it outperforms previous systems in both audio quality and alignment with the text description.

From the Researchers’ Perspective

The researchers behind MusicLM have developed a model that can generate high-fidelity music from text descriptions, such as “a calming violin melody backed by a distorted guitar riff.” They also show that the model can be conditioned on both text and a melody, allowing it to transform whistled and hummed pieces according to the style described in the text caption.

To support further research, the team has also made available MusicCaps, a dataset of 5.5k music-text pairs with rich text descriptions provided by human experts.

Features

Google researchers have made available 30-second snippets of music generated by MusicLM. They sound like actual songs and were created from paragraph-long descriptions that specify a genre, a mood, and particular instruments. The model can also generate five-minute-long pieces from one or two words, such as “melodic techno.” One of the most exciting demonstrations is the “story mode,” where the model is given a script and morphs between different prompts.
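As a rough illustration of the “story mode” idea, the sketch below models a script as a list of timed text prompts and looks up which prompt is in effect at a given moment. The `Segment` type, the `active_prompt` helper, and the example prompts and timings are all hypothetical and chosen for illustration. This is not MusicLM's interface, which is not publicly available.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Segment:
    """One entry in a hypothetical story-mode script."""
    start_s: float  # time at which this prompt takes over, in seconds
    prompt: str     # text description for this stretch of audio


def active_prompt(script: List[Segment], t: float) -> Optional[str]:
    """Return the prompt in effect at time t, or None before the first segment."""
    current = None
    for seg in sorted(script, key=lambda s: s.start_s):
        if seg.start_s <= t:
            current = seg.prompt
        else:
            break
    return current


# Illustrative script: the prompts and timings are made up for this example.
script = [
    Segment(0.0, "calm meditation music"),
    Segment(15.0, "upbeat wake-up music"),
    Segment(30.0, "energetic running music"),
]

print(active_prompt(script, 20.0))  # upbeat wake-up music
```

A real system would also have to blend the music smoothly across each boundary. This sketch only covers the bookkeeping of which description applies when.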

MusicLM can also simulate human vocals. The generated voices have a realistic tonality and overall sound, but they also have an artificial quality that can be described as grainy or staticky. This quality is less evident in some examples but can be heard clearly in others.

Can It Work on Real-World Requirements?

The concept of AI-generated music has a long history dating back decades. Systems have been developed to compose pop songs, replicate Bach’s compositions (reportedly better than humans can), and even perform live. One recent project uses the AI image generation engine Stable Diffusion to convert text prompts into spectrograms, which are then converted into audio.

According to the paper, MusicLM outperforms previous systems in quality and adherence to the caption. It can also take in audio and copy the melody, and this last aspect is one of the most impressive demonstrations of the model’s capabilities. The researchers’ demo page includes examples in which the model is given a recording of someone humming or whistling a melody, and you can hear how MusicLM reproduces it as an electronic synth lead, string quartet, guitar solo, and so on. Judging from the examples we listened to, MusicLM handles the task very well.

How Does Google MusicLM AI Work?

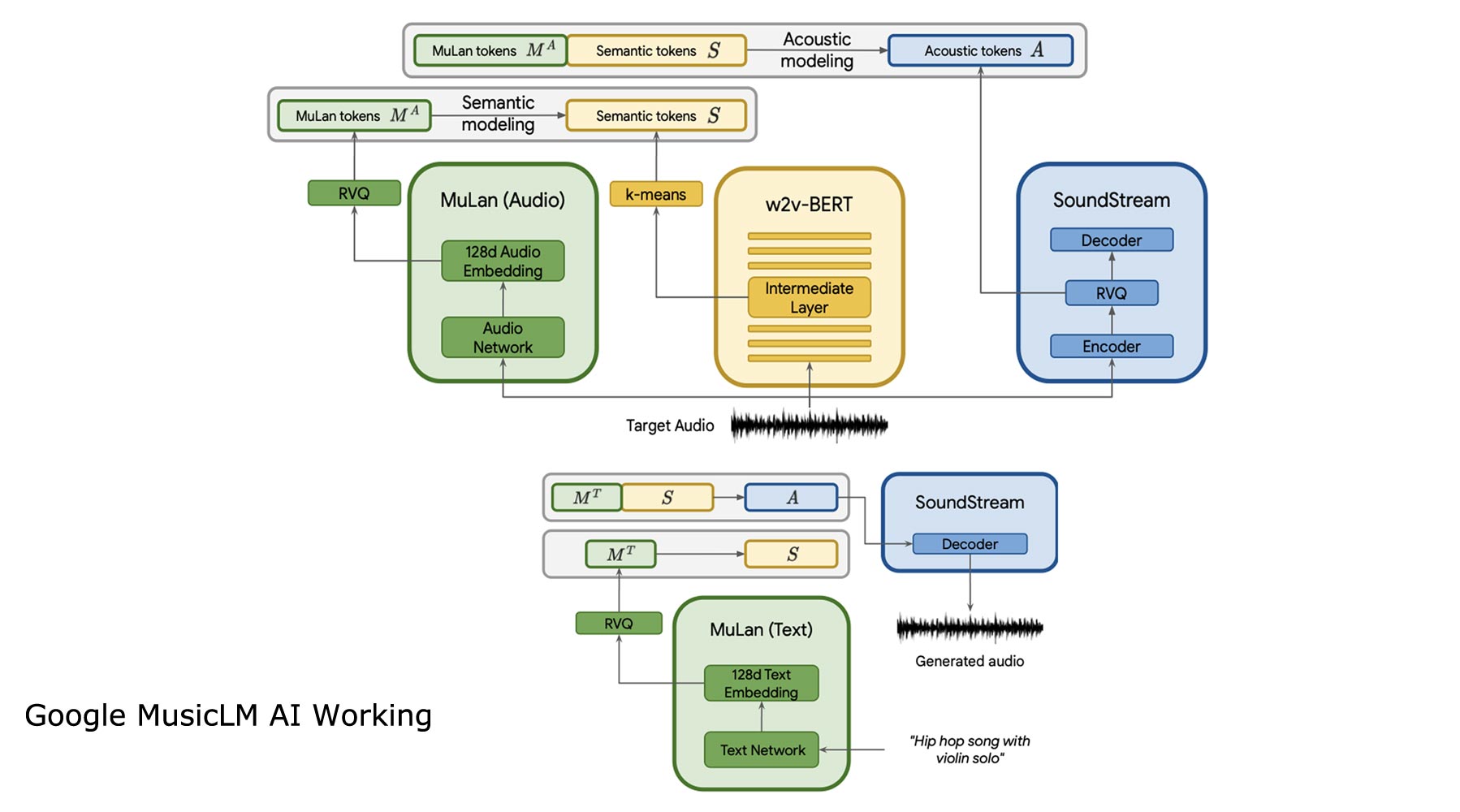

The image represents the workflow of MusicLM, an AI model that generates high-fidelity music from text descriptions. The process starts with providing text descriptions of the desired theme, like “a calming violin melody backed by a distorted guitar riff,” to the model.

The model then treats music generation as a hierarchical sequence-to-sequence modeling task, which lets it generate music at 24 kHz that remains consistent over several minutes.
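To get a feel for what “24 kHz over several minutes” means, it helps to count raw audio samples. The helper below is our own back-of-the-envelope arithmetic, not part of MusicLM. It assumes a single (mono) channel, and the function name is illustrative.

```python
SAMPLE_RATE_HZ = 24_000  # 24 kHz, the output rate quoted for MusicLM


def num_samples(duration_s: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Number of audio samples in a mono clip of the given duration."""
    return int(round(duration_s * sample_rate_hz))


print(num_samples(30))      # 720000 samples in a 30-second snippet
print(num_samples(5 * 60))  # 7200000 samples in a five-minute piece
```

Keeping a melody and mood coherent across millions of samples is a plausible reason for splitting the problem into hierarchical stages rather than predicting the waveform in one pass.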

MusicLM can also adapt to a melody provided. It can take a whistled or hummed song and transform it according to the style described in a text caption. The generated music is then evaluated based on its audio quality and adherence to the text description.

To support future research, the company has made available a dataset called MusicCaps. It includes 5.5k music-text pairs, all of which have rich text descriptions written by human experts.

Wrap Up

MusicLM is a powerful AI model that can generate high-fidelity music from text descriptions. Because it can be conditioned on both text and melody, it can also transform whistled and hummed pieces according to the style described in a text caption. The researchers have demonstrated that the model can produce realistic, high-quality audio that stays consistent over several minutes.

The researchers have also publicly released MusicCaps, a dataset of 5.5k music-text pairs with detailed text descriptions provided by experts, which will support future research in this field. Overall, MusicLM is a significant step forward for AI-generated music and has the potential to revolutionize the music industry.


Selva Ganesh is the Chief Editor of this blog. He is a computer science engineer, an experienced Android developer, and a professional blogger with 8+ years in the field. He has completed Google News Initiative courses. He runs Android Infotech, which offers problem-solving articles to readers around the globe.
